Can You Close the Performance Gap Between GPU and CPU for Deep Learning Models? - Deci


Description

How can we optimize CPUs for deep learning model performance? This post discusses model efficiency and the performance gap between GPU and CPU inference. Read on.

Brandon Johnson on LinkedIn: CPU, GPU… DPU?

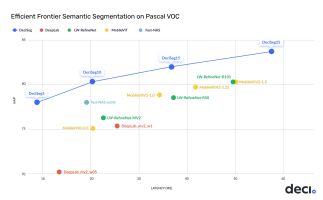

Deci Advanced semantic segmentation models deliver 2x lower latency, 3-7% higher accuracy

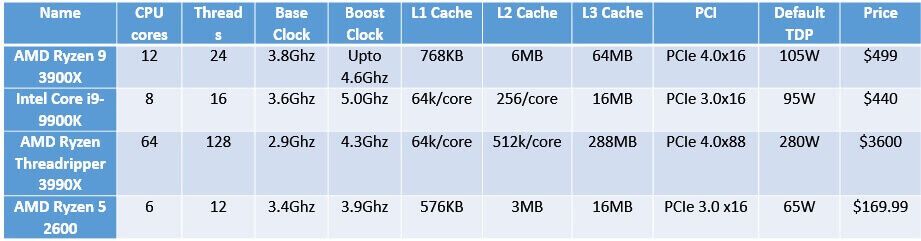

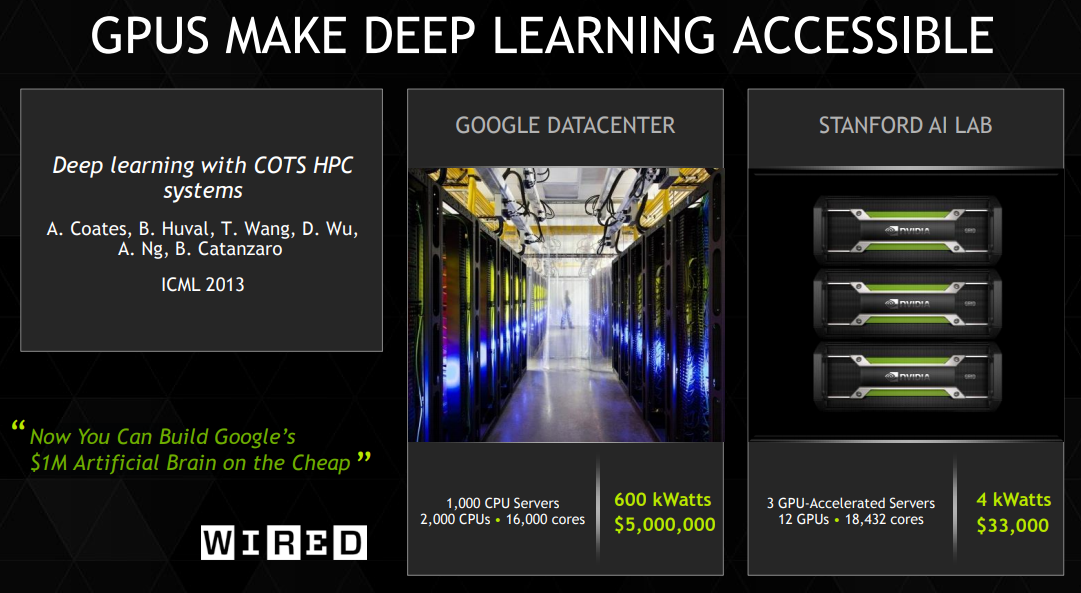

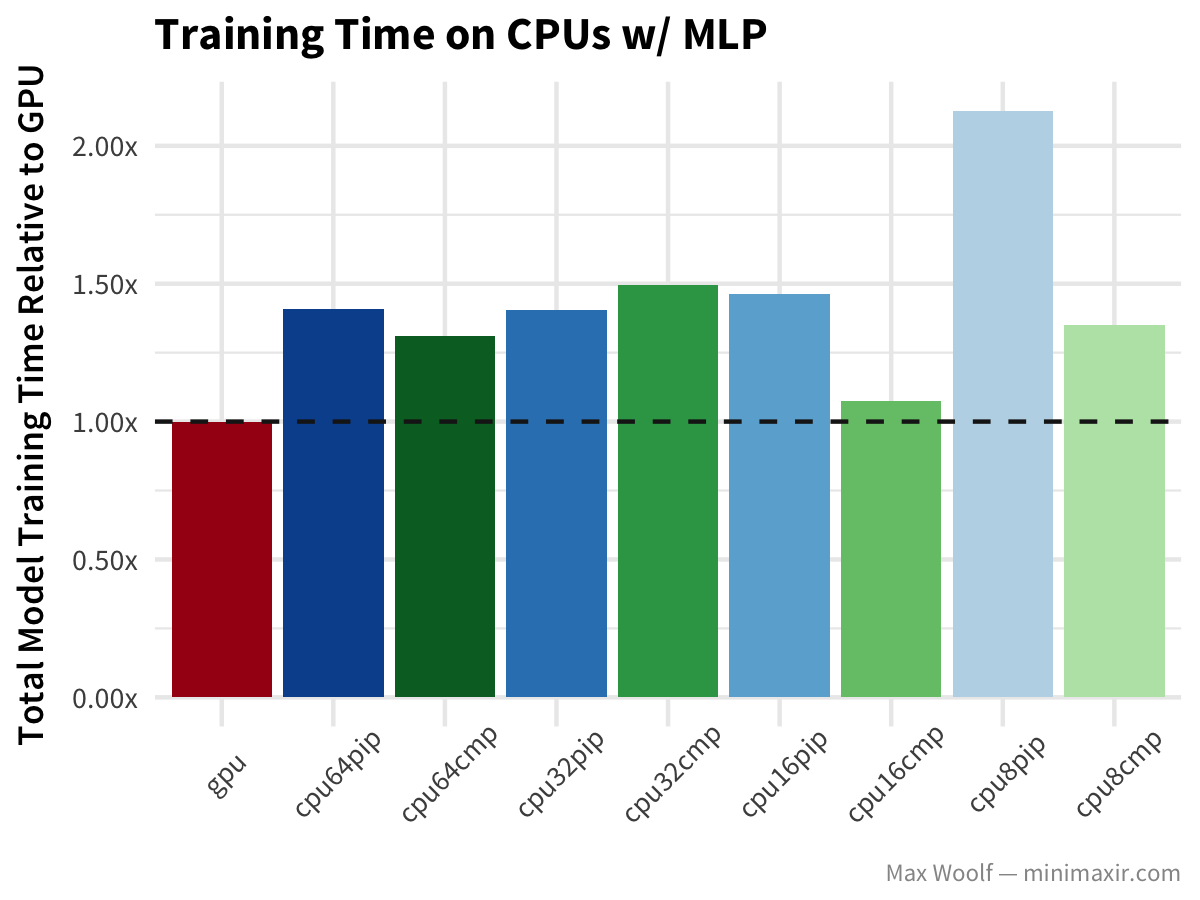

CPU vs GPU in Machine Learning Algorithms: Which is Better?

Is there a way to train simultaneously on CPU and GPU? (e.g. 2 separate neural network models) - Quora


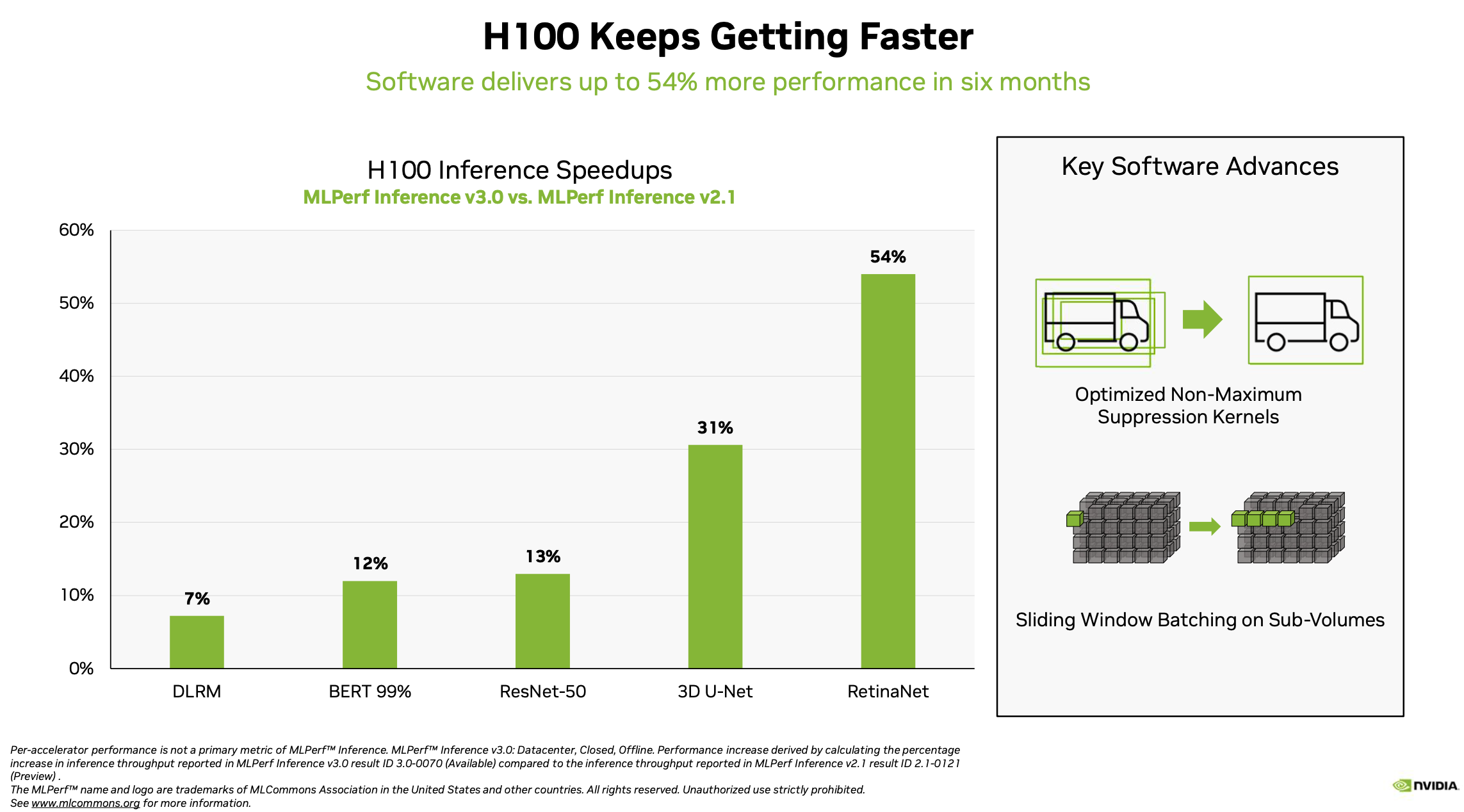

NVIDIA TensorRT-LLM Supercharges Large Language Model Inference on NVIDIA H100 GPUs

Monitor and Improve GPU Usage for Training Deep Learning Models, by Lukas Biewald

Hardware for Deep Learning. Part 3: GPU, by Grigory Sapunov

Benchmarking TensorFlow on Cloud CPUs: Cheaper Deep Learning than Cloud GPUs

MLPerf Inference 3.0 Highlights - Nvidia, Intel, Qualcomm and…ChatGPT
